Text sources are often PDFs. If optical character recognition (OCR) has been applied to them, the pdftools R package lets you extract the text of every PDF into text files stored in a folder. The readtext package then imports that set of text files into a data frame that quanteda can turn into a corpus. Some cleaning is nevertheless necessary before your text becomes a useful corpus. Here’s how.

First, you will need the packages mentioned above (pdftools, readtext and quanteda), plus stringr for the cleaning step.
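A minimal setup sketch, assuming the packages come from CRAN: it installs only those that are missing (this needs internet access) and then loads all four.

```r
# The four packages used in this post
pkgs <- c("pdftools", "readtext", "quanteda", "stringr")

# Install only the packages that are not available yet
to_install <- pkgs[!vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)]
if (length(to_install) > 0) install.packages(to_install)

# Attach all of them
invisible(lapply(pkgs, library, character.only = TRUE))
```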

Extracting a txt from a PDF

The extraction needs a single function provided by the pdftools package, pdf_text("filepath_and_filename_of_your_PDF"), which returns a character vector with one element per page.
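To see what pdf_text() returns, here is a self-contained sketch. It first draws a throwaway two-page PDF with R's own pdf() device (a stand-in for your document), then extracts it and writes all pages to a .txt file next to it.

```r
library(pdftools)

# Create a small two-page PDF to stand in for a real document
pdf_file <- tempfile(fileext = ".pdf")
grDevices::pdf(pdf_file)
for (p in 1:2) {
  graphics::plot.new()                      # starts a new page
  graphics::text(0.5, 0.5, paste("Page", p))
}
invisible(grDevices::dev.off())

# One character string per page
pages <- pdf_text(pdf_file)
print(length(pages))

# Write all pages to a single text file
txt_file <- sub("\\.pdf$", ".txt", pdf_file)
writeLines(pages, txt_file)
```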

Identifying the encoding of the txt files

Next comes converting your extracted text files into a text data frame. For this to work correctly, you need to identify the encoding of your source texts. Anything other than UTF-8 is basically useless unless you work on English texts only, because accented and other non-ASCII characters end up garbled. If you are lucky, the texts will already be in UTF-8, and you can import them like this:

txtdf <- readtext(your_file_path,encoding='UTF-8')

On Windows machines, chances are that your extracted text files will be encoded in Latin1 or in its Microsoft variant Windows-1252 rather than in UTF-8. Luckily, readtext() converts these to UTF-8 on import, but you need to specify the encoding of the original texts, like this:

txtdf <- readtext(your_file_path,encoding='Latin1')

or like this:

txtdf <- readtext(your_file_path,encoding='Windows-1252')
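If you are not sure which of these applies, one quick check, sketched here with base R only, is whether a file's bytes are valid UTF-8; `is_utf8_file()` is a hypothetical helper name. Plain ASCII files also pass, since ASCII is valid UTF-8. A Western European text that fails is most likely Latin1 or Windows-1252, but this check cannot tell those two apart.

```r
# Hypothetical helper: TRUE if the file's bytes form valid UTF-8
is_utf8_file <- function(path) {
  bytes <- readBin(path, what = "raw", n = file.size(path))
  validUTF8(rawToChar(bytes))
}

# Two sample files containing "café": one in UTF-8, one in Latin1
utf8_file <- tempfile(fileext = ".txt")
latin1_file <- tempfile(fileext = ".txt")
writeBin(charToRaw(enc2utf8("caf\u00e9")), utf8_file)     # é stored as bytes C3 A9
writeBin(as.raw(c(0x63, 0x61, 0x66, 0xe9)), latin1_file)  # é stored as byte E9

print(is_utf8_file(utf8_file))    # TRUE
print(is_utf8_file(latin1_file))  # FALSE
```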

The actual cleaning

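The main part is accomplished with regular expressions (RegEx). An excellent site to test your expressions is regex101.com. str_replace_all() comes from the stringr package and replaces every match of a pattern in a string. Keep in mind that backslashes must be doubled inside R strings, so the RegEx \s is written "\\s".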

To remove hard-coded hyphenation:

str_replace_all(txtdf$text, "¬[\\s]+", "")
str_replace_all(txtdf$text, "-[\\s]+", "")
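To see what these two patterns do, here is a small test on a made-up string. Note that "-[\\s]+" also swallows spaced dashes such as "Paris - Lyon". The stricter variant below is my own assumption, not part of the recipe above: it only removes a hyphen or ¬ that sits between a word character and a line break, which relies on the line breaks written by pdf_text() still being present in the text.

```r
library(stringr)

x <- "an ex-\nample of hyphen\u00ac\nation, Paris - Lyon"

# The two patterns from above, applied one after the other
loose <- str_replace_all(x, "\u00ac[\\s]+", "")
loose <- str_replace_all(loose, "-[\\s]+", "")
print(loose)   # "an example of hyphenation, Paris Lyon"

# Stricter variant: hyphen or not-sign (U+00AC) preceded by a word
# character and followed by optional spaces/tabs and a line break
strict <- str_replace_all(x, "(?<=\\w)[\\-\u00ac][ \\t]*\\n\\s*", "")
print(strict)  # "an example of hyphenation, Paris - Lyon"
```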

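To replace with a space every character that is not a letter, a digit or one of the punctuation marks . : , ? ! ; (note that this also removes apostrophes, hyphens, quotation marks and brackets):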

str_replace_all(txtdf$text, "[^[:alnum:].:,?!;]", " ")
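A quick check on a made-up French string shows what survives; str_squish() is only added to make the output readable. stringr uses ICU regular expressions, in which [:alnum:] also matches accented letters. For languages that rely on apostrophes and hyphens, the variant below (an adaptation, not part of the original recipe) adds them to the list of kept characters.

```r
library(stringr)

x <- "l'\u00c9tat (1848) \u00ab vive \u00bb la-R\u00e9publique!"

# Pattern from above: keeps letters, digits and . : , ? ! ;
cleaned <- str_squish(str_replace_all(x, "[^[:alnum:].:,?!;]", " "))
print(cleaned)   # "l État 1848 vive la République!"

# Variant that also keeps apostrophes and hyphens
cleaned2 <- str_squish(str_replace_all(x, "[^[:alnum:].:,?!;'\\-]", " "))
print(cleaned2)  # "l'État 1848 vive la-République!"
```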

To collapse multiple whitespaces into single spaces and trim leading and trailing ones:

str_squish(txtdf$text)
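str_squish() from stringr trims both ends and collapses every internal run of whitespace (spaces, tabs, line breaks) into a single space, which is the same as combining gsub("\\s+", " ", ...) with str_trim(). A quick check on made-up strings, showing that letters, including capitals, are left alone:

```r
library(stringr)

x <- c("  several   spaces\n\tand tabs  ", "Bonjour  Bordeaux")
squished <- str_squish(x)
print(squished)  # "several spaces and tabs" "Bonjour Bordeaux"
```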

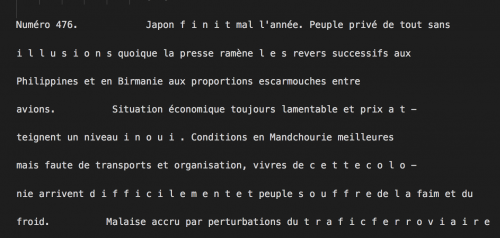

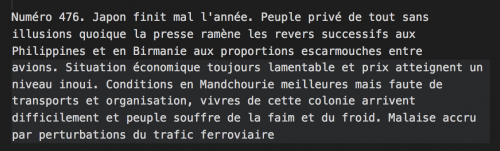

The most difficult part is joining words that have been split into single characters, like “sans i l l u s i o n s quoique”, which should become “sans illusions quoique”. They are caused by what seems to be a bug in the current version of pdf_text(), but they can also occur naturally in older scanned documents: before computer-based word processing with bold and italic features, i.e. up to the 1990s, people writing on typewriters often spaced out letters to emphasise parts of the text. To turn them back into complete words, RegEx’s lookbehind (?<= … ) and negative lookahead (?! …) operators save the day:

str_replace_all(txtdf$text,"(?<=\\w\\s)(\\w)(\\s)(?!\\w\\w)","\\1")
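How the pattern works: the lookbehind requires a character and a space before the single character, and the negative lookahead keeps the space when the next word has at least two characters, so the last spaced letter stays separated from the following word. A quick check, with the pattern wrapped in a hypothetical helper, also shows a caveat: genuine runs of one-character words, common in French, get joined too.

```r
library(stringr)

# The pattern from above, wrapped in a hypothetical helper
join_spaced_letters <- function(x) {
  str_replace_all(x, "(?<=\\w\\s)(\\w)(\\s)(?!\\w\\w)", "\\1")
}

print(join_spaced_letters("sans i l l u s i o n s quoique"))
# "sans illusions quoique"

# Caveat: legitimate one-character words are joined as well
print(join_spaced_letters("il y a 2 ans"))
# "il ya2 ans"
```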

Here is the complete code:

library(pdftools)
library(readtext)
library(quanteda)
library(stringr)

# First, extract raw TXTs from PDFs.
# To extract a whole directory, you need to build a function:
extracttext <- function(filename) {
  print(filename)
  # Fallback text, kept if pdf_text() fails (e.g. on very large PDFs):
  txt <- paste("Extraction failed. File size:", file.size(filename), "bytes")
  try({ txt <- pdf_text(filename) })
  # File name without folder and .pdf extension:
  f <- tools::file_path_sans_ext(basename(filename))
  print(f)
  # pdf_text() returns one string per page; write() puts them all in one TXT:
  write(txt, file.path(exportpath, paste0(f, ".txt")))
}

dir <- "the_path_to_your_directory_containing_PDFs"
exportpath <- "the_path_to_the_directory_where_you_want_to_export_your_TXTs"
if (!dir.exists(exportpath)) dir.create(exportpath, recursive = TRUE)

# Make a list of the PDFs you want to convert:
fileswithdir <- list.files(dir, pattern = "\\.pdf$", ignore.case = TRUE,
                           recursive = FALSE, full.names = TRUE)

# And run the function on all of them:
for (file in fileswithdir) extracttext(file)

# Now read the whole directory of TXTs as a readtext object.
# UTF-8 is the default encoding but sometimes, especially on Windows machines, you are dealing with Latin1 or worse.
# Verify the encoding of your texts and adapt the encoding argument accordingly:
txtdf <- readtext(file.path(exportpath, "*.txt"), encoding = "UTF-8")

# Remove hard-coded hyphenation:
txtdf$text <- str_replace_all(txtdf$text, "¬\\s+", "")
txtdf$text <- str_replace_all(txtdf$text, "-\\s+", "")

# Remove rubbish characters (anything but letters, digits and . : , ? ! ;):
txtdf$text <- str_replace_all(txtdf$text, "[^[:alnum:].:,?!;]", " ")

# Collapse multiple whitespaces and trim both ends:
txtdf$text <- str_squish(txtdf$text)

# Join words that have been split into single characters, e.g.
# "sans i l l u s i o n s quoique" becomes "sans illusions quoique":
txtdf$text <- str_replace_all(txtdf$text, "(?<=\\w\\s)(\\w)(\\s)(?!\\w\\w)", "\\1")

# Then convert the readtext data.frame to a corpus and you are ready to analyze:
txtc <- corpus(txtdf)
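Before analysing, it can be worth checking that the corpus has the expected number of documents and sensible token counts. This sketch uses a hand-made two-row data frame as a stand-in for the readtext output (same doc_id and text columns, which corpus() picks up by default).

```r
library(quanteda)

# Tiny stand-in for the readtext data.frame
txtdf <- data.frame(
  doc_id = c("a.txt", "b.txt"),
  text = c("sans illusions quoique", "il y a deux ans"),
  stringsAsFactors = FALSE
)

txtc <- corpus(txtdf)  # uses the doc_id and text columns
print(ndoc(txtc))      # 2

toks <- tokens(txtc, remove_punct = TRUE)
print(ntoken(toks))    # 3 tokens for a.txt, 5 for b.txt
```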

Note that this code only repairs the text of PDFs to which OCR has already been applied; it does not perform OCR itself. To perform OCR on PDF files with R, I suggest the tesseract package: https://cran.r-project.org/web/packages/tesseract/vignettes/intro.html
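pdftools can also call tesseract directly: pdf_ocr_text(), available in recent pdftools versions (2.3 or later, as far as I know), renders each page as an image, runs OCR on it and returns one string per page. A minimal sketch, assuming both pdftools and tesseract are installed; the one-page PDF drawn with R's pdf() device merely stands in for a scanned document. For French texts you would pass language = "fra" after downloading that model once with tesseract::tesseract_download("fra").

```r
library(pdftools)  # pdf_ocr_text() also needs the tesseract package

# Stand-in for a scanned document: a one-page PDF with large text
scan_file <- tempfile(fileext = ".pdf")
grDevices::pdf(scan_file)
graphics::plot.new()
graphics::text(0.5, 0.5, "HELLO WORLD", cex = 4)
invisible(grDevices::dev.off())

# Render each page at 300 dpi and run English OCR on it
ocr_pages <- pdf_ocr_text(scan_file, language = "eng", dpi = 300)
cat(ocr_pages)
```

The recognised text can then go through the same cleaning steps as the extracted TXTs.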